A Review Process

Whenever I talk about how we (and how I) think about reviews and feedback in the BuzzFeed Tech org, I'm struck by how many people outside our team and the company are surprised, with reactions ranging from "Oh that's interesting," to "OMG that seems so crazy." Since I arrived at BuzzFeed just over three years ago, I've spent a lot of time thinking about review processes. In my first year, I implemented a lightweight, quarterly review system for just the Product Design team (later reducing that to twice a year). And for the past two years, I've been working on, evolving and administering both midyear and annual review processes for our entire Tech team (which includes IT, Engineering, Product and Project Management, etc.). With each feedback cycle, we've learned and evolved a lot, whether from passively watching how people approach reviews or through direct feedback, either 1:1 or through surveys we send out to our team.

So, in the spirit of sharing what I've learned and where that's led me, here is an overview of our current system, how we've structured it and the philosophy behind it.

But First, Goals

As with any design, engineering or product problem, it would be a mistake to approach reviews without any goals in mind. In the past, I've made the mistake of both assuming my instinct about the goals was correct without investigation and assuming that my goals were shared by others participating in the process (it seems obvious now that these are bad moves, and for god's sake I'm a designer so I should have known better, but alas). Here are the primary goals I've begun to more consciously refer to when considering changes to the review process:

If successful, the review process should...

- Provide a moment for self-reflection.

- Help people become better collaborators.

- Strengthen alignment between managers and their teams when it comes to leveling, goal-setting, etc.

- Cultivate a stronger, more candid culture.

This is not a complete list (there wind up being sub-goals for each of these), but this is approximately where I start from when I think about our process.

Written Reviews

When I first started at BuzzFeed, I noticed an interesting phenomenon when the designers I managed received peer reviews from their engineering or product counterparts. Frequently, non-designer peers would write something like, "Amelia is great to work with. While I don't feel qualified to evaluate her as a designer, I really like the work we do together and she's always on time with finishing her work." There were, hopefully obviously, a couple of problems with this. First of all, no one knew how to evaluate one another: do I try to evaluate my design counterpart as a designer? How do I even do that if that's not my area of expertise? Secondly, when people would then default to talking about their collaboration, the results were vague, lacking any detail or any sort of reflection on the values of our organization (which, to be fair, we hadn't established at that point).

So, stealing an idea from my time at Amazon, we drafted and rolled out Leadership Principles to our entire team. We then shifted the peer, self and manager review structure to consist of two questions:

- What 1-2 Leadership Principles do you feel this person has embodied over the past six months? Provide specific projects and examples to support your review.

- What 1-2 Leadership Principles should this person focus on in the coming months? Again, provide specific examples to support your review.

This alone completely changed the quality of most of the reviews, at least according to the people who've received them. Giving people a sense of what our organization values, and using those values as a rubric, it turns out, takes away the typical Blank Page stress most people felt when staring down writing a review for themselves, their peers or their teams.

Direct Feedback

This is the part where I've had people gasp, tell me I'm crazy and then list all the ways they think it could go wrong. In the beginning our peer reviews worked a lot like other companies': people would write a coworker's review and submit it to their peer's manager. The manager would collect all the peer feedback, and then spend hours anonymizing and rewriting it into a comprehensive review without any identifying information (as much as possible). Then they'd deliver their collated review to the person they manage, being careful not to reveal any identities unless they'd previously been given permission to do so by those who wrote the reviews.

A year ago, we ended that practice and instituted completely non-anonymous peer reviews. We still have peers write their reviews and submit them to their coworker's manager. But now, managers compile all the reviews as written, with names attached, into one large Google Doc and share that, along with their own written review, with the person being reviewed.

Here are some of the questions I've received when talking about this:

What if someone just absolutely torches someone else in a review?

A lot of people think this is going to happen, but to be totally honest, I've yet to see or hear of this happening. And if it did, this is why we still have people send their reviews to their peer's manager first. It gives managers a chance to read over feedback, gut-check for tone, ask for more examples if necessary, etc. In short, we have an editing layer for this very purpose.

What if someone has a super serious complaint about a person they're reviewing?

If someone has a problem with a coworker that's so serious they're afraid to confront the person about it directly, we don't want them to wait for a review cycle! We encourage our teams to talk to their manager, their peer's manager, HR or a manager in the org they trust as soon as possible if something is so egregious they feel uncomfortable putting it down for their coworker to read.

Won't this make people write less useful, watered-down feedback?

I've honestly been pleasantly surprised that the answer to this has been mostly no. As I mentioned, we do have an editing step where managers reach back out for more detail if they need it (which I do wind up doing a small percentage of the time). But folks on our team seem comfortable enough to be radically candid with each other. Additionally, because peer reviews are delivered directly, there's more incentive to write meaningful feedback (you don't want your coworker to see you wrote a one-line "This person is cool to work with" review of them).

What's been really amazing about this is what it's done for review conversations. Before, I or another manager would find ourselves delivering an overly vague review to someone who could probably figure out who or what we were talking about no matter how well we'd tried to conceal the identities of their reviewers. The conversations were always stilted, with people yearning for more direct feedback and more specific examples of how their work or working style impacted a teammate.

Now, I start all my review conversations the same way: I send out all the reviews a day or two before the review convo, giving people a chance to read through them. And then when we do meet, I lead off with, "So, what were the highlights of the review for you? Anything surprising? Totally expected? Anything interesting?" This puts people in the driver's seat for their own reviews. It's not unusual for people on my team to even come with notes prepared from reading their reviews.

What's more, it's becoming more and more common that someone on my team responds to a peer review with, "I know exactly what this person is talking about. I'm going to talk to them, because I felt the same way, but wasn't sure what to do about it at the time." Then, on their own, they engage in conversation with their peers to further understand the feedback, get further clarity on how to address the feedback moving forward, and/or level-set on how they could work better together. In the eleven years I've worked in technology, I've never seen anything like that. It's magical.

Level Calibration

This is something that is currently unique to the Product Design team. In the past, I've shared our Role Documentation, which we iterate on each year (after the annual review cycle, conveniently enough). After implementing the review process above, the one goal I felt we were falling short on was ensuring our managers and designers were aligned on their skill development as it related to our role documentation. So, for the past couple of years, we've been doing what we call "Calibration."

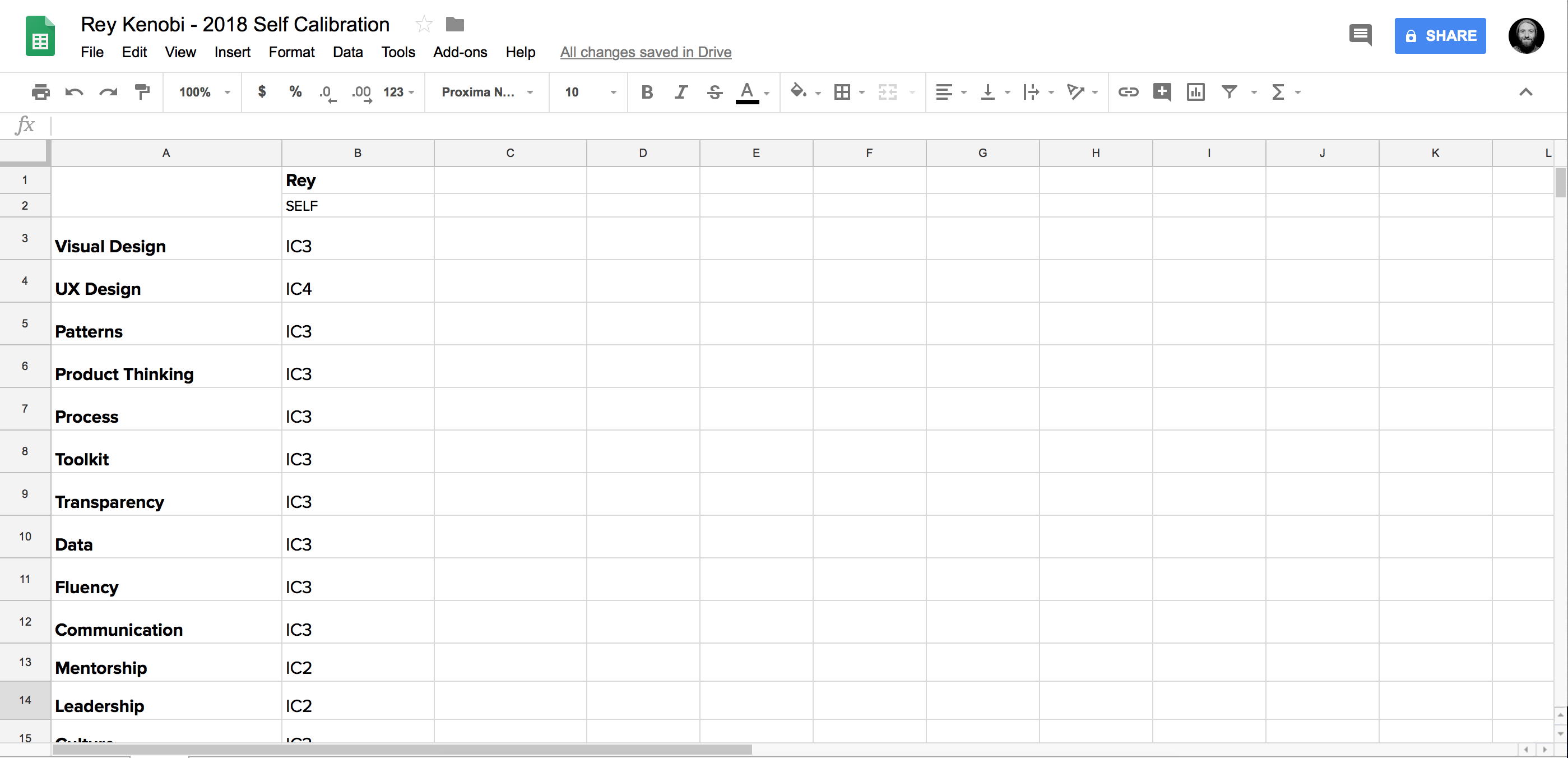

To start, we create a spreadsheet for each person on the Product Design team, which lists out the categories from the role document (contributors get those categories, managers get the manager ones). Then, each person on the team, using the role document as a guide, reviews themselves, inputting what level they believe they are based on our documentation and their understanding of their own performance. It winds up looking something like this:

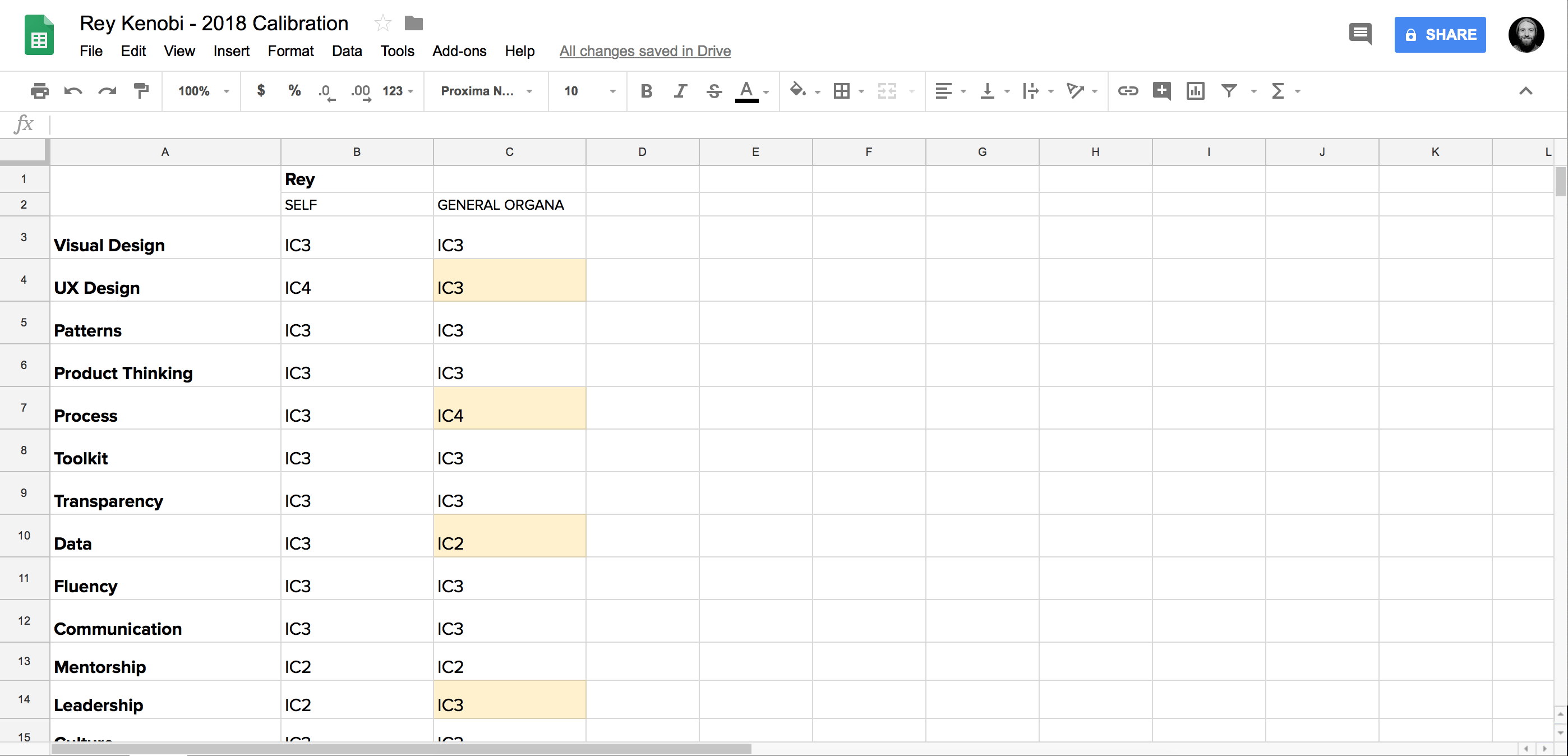

Simultaneously, their manager fills out a similar sheet, inputting which skill level they think their team member is at for each category. Then, they combine the two sheets and highlight any discrepancies between the manager's ratings and the self-ratings of the person they manage.
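For anyone who would rather script that comparison than eyeball two spreadsheets, here is a minimal sketch in Python. It is only an illustration: the category names, the numeric levels and the `find_discrepancies` helper are all hypothetical and are not taken from our role documentation or our actual sheets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Discrepancy:
    """One category where the self-rating and the manager rating differ."""
    category: str
    self_level: int
    manager_level: int

    @property
    def direction(self) -> str:
        # Only called on real discrepancies, so the two levels are never equal here.
        if self.manager_level > self.self_level:
            return "manager rated higher"
        return "self rated higher"


def find_discrepancies(self_ratings: dict[str, int],
                       manager_ratings: dict[str, int]) -> list[Discrepancy]:
    """Return the categories rated on both sheets where the levels disagree."""
    shared = sorted(self_ratings.keys() & manager_ratings.keys())
    return [
        Discrepancy(category, self_ratings[category], manager_ratings[category])
        for category in shared
        if self_ratings[category] != manager_ratings[category]
    ]


# Hypothetical example data: categories and levels are made up for illustration.
self_sheet = {"Visual Design": 3, "Communication": 2, "Prototyping": 3}
manager_sheet = {"Visual Design": 3, "Communication": 3, "Prototyping": 2}

discrepancies = find_discrepancies(self_sheet, manager_sheet)
for d in discrepancies:
    print(f"{d.category}: self={d.self_level}, manager={d.manager_level} ({d.direction})")
```

The output is just the list of categories worth discussing. Deciding who is right still happens in the review conversation, not in the script.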

Then they talk through this document as part of their review conversation and, for folks attempting to level up in certain areas or into a new role, we use these calibrations to help guide what goals we set together. Where there's disagreement, it's obvious (it's in the doc!) and we have an open discussion about how to align on each category. It's not uncommon for me to start out with one idea and be convinced that I was wrong and forgetting some important information. It's also, believe it or not, common for managers to rate their team members higher than their team members rate themselves. It's always a nice moment to be able to tell someone you think they're performing even better than they thought they were and why.

These Calibrations also help point out holes in our role documentation. In fact, many of the iterations we make on our docs are a direct result of Calibration conversations revealing that our documents could be clearer, or that a skill we thought was important at one time in our org doesn't make sense at all anymore for our designers. It's been incredibly impactful as a tool, and it has demystified for our team how their own thoughts about their performance might diverge from their manager's.

It's a Process

Of course, there are still ways to improve what we're doing. I like to think of blog posts like this as a freeze frame of a moment in time, a crystallization of a thought or process that we can look back on in a year or two to see all the ways we've shifted and changed since then. Will we find new goals to pursue? Will something about our Principles or Calibration exercises break down as the team and organization grow?

Time will tell. For now, I'm just personally grateful that our Tech department is so willing to try new things, to ask hard questions and provide their own perspectives and feedback (last time we did this an engineering manager sent me a doc of many bullet points based on feedback he'd gotten from his team, which caused us to seriously look at editing our Leadership Principles for the first time since their inception). Because any process, no matter how well-conceived and considered, is worth nothing without the people.

PS: I'd be remiss if I didn't note that while I've historically been responsible for the format, I've received a metric ton of help and support in the implementation and execution of all of this (thanks, Design Managers, Amy, Luke, Alyssa and Michelle!).

PPS: Both the Leadership Principles and Role Documentation linked in the article are free to use! Steal 'em, fork 'em, make 'em your own!